Your AI Agent Did Something Last Tuesday and Nobody Can Tell You What

"You're a developer. You've got an AI agent doing something actually important — code review, infrastructure configs, customer data." "Because what you're about to discover is that you have essentially zero ability to answer 'what did my agent do and why?'"

Let me set the scene. You're a developer. You've got an AI agent doing something actually important — code review, infrastructure configs, customer data. Last Tuesday it produced an output. Someone on your team said "this doesn't look right." Now you need to figure out what happened.

Good luck.

Because what you're about to discover is that you have essentially zero ability to answer "what did my agent do and why?" You'll dig through logs that capture maybe 30% of what happened. You'll re-run the agent and get a completely different result. You'll realize the prompt changed, or the model version drifted, or one of the fourteen tools the agent called updated its API last Thursday.

The "It Works on My Machine" Problem, Except Worse

We solved this for regular software a long time ago. Not perfectly, but well enough. Version control. Reproducible builds. Docker. Lockfiles. If your CI pipeline produces a weird artifact, you can go look at the exact commit, the exact dependencies, the exact environment, and figure out what happened.

With AI agents? We've taken thirty years of hard-won infrastructure wisdom and thrown it in the garbage. "The agent used GPT-4? Which version? What was the system prompt? What tools were available? What was the temperature? What did the intermediate tool calls return?"

Why Deterministic Replay Isn't a Nice-to-Have

When I say "replay," I don't mean "run the agent again and hope you get something similar." That's not replay. Replay means: take the exact inputs, configuration, tool versions, and decision sequence, and produce the exact same steps and outputs. When you can't — because some dependency changed or the model is non-deterministic — the system tells you exactly which step diverged and why.

This matters for debugging. But it matters more for trust. If you can't replay an agent's work, you can't audit it. If you can't audit it, you can't explain it when something goes wrong.

Context Packs: The Idea That Should've Been Obvious

Here's the concept. I'm calling it a Context Pack: capture everything about an agent run in an immutable, content-addressed bundle.

Everything:

- The prompt and system instructions

- Input files (or content-addressed references)

- Every tool call and its parameters

- Model identifier and parameters

- Execution order and timestamps

- Environment metadata — OS, runtime, tool versions

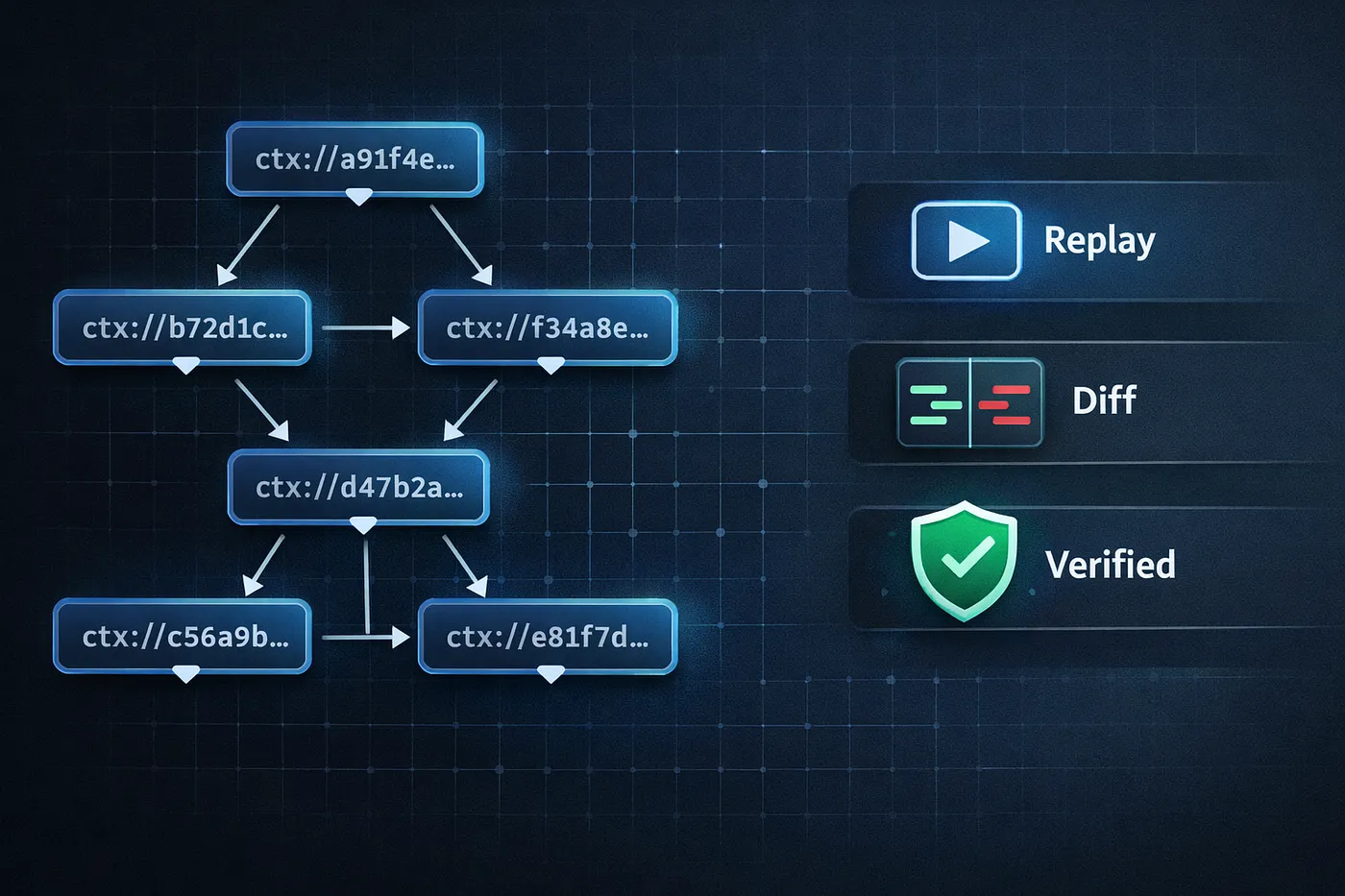

You hash it. You get ctx://. That hash is the run. Immutable. Portable. You hand it to someone on a different team, different continent, different OS — they can see exactly what happened. Replay it. Fork it. Diff it against another run.

(The spec for this is on GitHub if you want to look under the hood.)

Diffing Agent Decisions Like a Sane Person

Once you have Context Packs, you can diff them. Not just "the outputs are different" — you can identify where the decision chain diverged.

You ran your agent Monday. Reasonable code review. Ran it Wednesday with the same inputs. Something unhinged. Without Context Packs, you're squinting at two outputs and guessing. With them:

1ctx diff <monday-hash> <wednesday-hash>You get back: "The model version changed from gpt-4-0125 to gpt-4-0215. The search_codebase tool returned different results because the index was updated Tuesday. The third reasoning step diverged because of the different search results, cascading into a different tool selection at step five."

Contestable Outputs

This is the one that matters most and gets thought about least.

Every artifact your agent produces should be traceable back to the Context Pack that produced it. The generated code, the summarized document, the infrastructure config — all of it should carry metadata saying "I was produced by ctx://abc123, using these inputs and tools, with this confidence level."

Because eventually someone is going to challenge an output. A security reviewer. A compliance officer. A customer who got a weird response. When they do, you need to pull the thread all the way back.

1ctx verify <artifact>That tells you everything. Where it came from. What went into it. Whether the run can be replayed.

The Social Contract

Context Packs are shareable by hash. Not by environment. Not by platform. Not by "sign up for our observability dashboard." By hash.

1ctx fork <context-hash>Take someone's Context Pack. Fork it. Change the prompt. Swap a tool. Replay. Diff. Share your fork. It's git for agent decisions. If you can share the hash, you can reproduce the work.

What This Isn't

This is infrastructure. A substrate. The layer underneath your agent framework. It doesn't orchestrate agents. It doesn't help you write prompts. It doesn't search vectors. It doesn't phone home to anybody's cloud. It captures what happened, makes it reproducible, diffable, and shareable.

The implementation is deliberately boring: CLI in Go. Context Packs as JSON with content-addressed blobs. Local filesystem, git-compatible layout. Optional MCP adapter eventually.

---

Tags: AI Agent Development · AI Agent Context · Context Engineering AI · Coding Agent Memory · Reproducibility

Share this article

Article: Your AI Agent Did Something Last Tuesday and Nobody Can Tell You What

URL: /blog/your-ai-agent-did-something-last-tuesday-and-nobody-can-tell-you-what